Larrabee

We first started hearing about Larrabee before the Fall 2007 Intel Developer Forum, and Intel CEO Paul Otellini then revealed some details on the chip at the event. At the time, Intel said that it wasn't really looking at Larrabee as a discrete graphics card solution for gamers. It said instead that the chip had applications in supercomputing, financial services, physics and health, but also that it would be good at graphics... from a workstation perspective.

We felt that there was more to it than that. After all, why would Intel have acquired Havok, the leading physics middleware developer, if it wasn't looking to move into the gaming market? Larrabee is a discrete graphics product with other applications, just like Nvidia's GeForce 8- and 9-series cards, which all support CUDA, a programming environment that enables developers to utilise the parallelism of Nvidia GPUs in applications other than games.

Since then, we have been trying to get to the bottom of the almighty Larrabee puzzle, and we think we've finally got there... at least to some extent, anyway.

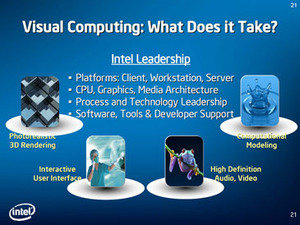

Intel sees Larrabee as a 'catch-all' for visual computing: Intel's Steve Smith said that Larrabee "is the best architecture for tackling visual computing problems." That's undoubtedly a bold claim to make, but if anyone is going to make that kind of claim and deliver, it's probably going to be Intel, at least based on recent history.

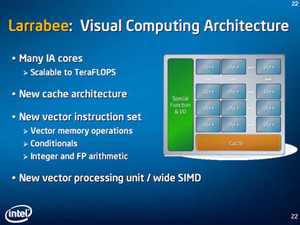

Intel’s discrete graphics product will be built on Intel Architecture (IA) technology with many smaller cores (possibly between 16 and 24, each with 16 in-order threads, according to Ars Technica) and will be based on the x86 instruction set. Steve Smith confirmed that Larrabee will use a wide SIMD design, but the company hasn't gone into the gory architectural details. What we do know, though, is that it is scalable to teraFLOPS, and it seems very likely that some ideas from Intel's Terascale research project will be expanded upon in Larrabee.
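To see how a many-core, wide-SIMD chip gets into teraFLOPS territory, peak throughput can be estimated as cores × SIMD lanes × floating-point operations per lane per cycle × clock speed. The figures below are purely hypothetical: the 16 cores come from the low end of Ars Technica's estimate, while the 16-lane vector width, the fused multiply-add (counted as two operations per lane per cycle) and the 2GHz clock are our own illustrative assumptions, not Intel specifications.

$$
P_{\text{peak}} = N_{\text{cores}} \times W_{\text{SIMD}} \times F_{\text{lane}} \times f_{\text{clock}} = 16 \times 16 \times 2 \times 2\,\text{GHz} = 1024\ \text{GFLOPS} \approx 1\ \text{TFLOPS}
$$

Under the same assumptions, moving to the 24-core end of the range would give 24 × 16 × 2 × 2GHz = 1536 GFLOPS, which is why a design like this scales naturally with core count.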


Smith said that it is designed to be a “programmable x86 machine” with extensions including vector instruction sets (memory ops, conditional and integer/arithmetic). Each core also has its own local cache (of an as-yet unspecified size) in addition to a global cache, along with a texture sampler and a rasteriser option, meaning that it will eventually compete with Nvidia and AMD in the graphics market. To quote the company, “Intel will have a competitive graphics product [in 2009]”, although we believe that early market launches will be aimed at workstation and HPC applications. It will also be built “using Intel’s leading process technology”, which we assume to mean 45nm and beyond.
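Conditional vector instructions are easier to picture with a toy model. The sketch below is hypothetical plain C, not Larrabee's actual instruction set (which Intel hasn't detailed): it treats a vector register as 16 float lanes, an assumed width, and pairs it with a per-lane bit mask, so that a compare decides which lanes a following add is allowed to update, with no branching. The names `vec16`, `mask16`, `vcmpgt` and `vadd_masked` are made up for illustration.

```c
#include <stdint.h>

/* Hypothetical 16-lane vector model; the width is an illustrative
   assumption, not a published Larrabee specification. */
#define LANES 16

typedef struct { float v[LANES]; } vec16;  /* one "vector register" */
typedef uint16_t mask16;                   /* one predicate bit per lane */

/* Vector compare: bit i of the result is set when a.v[i] > b.v[i].
   Real SIMD hardware would do all lanes in one instruction; the loop
   only models that behaviour. */
static mask16 vcmpgt(vec16 a, vec16 b) {
    mask16 m = 0;
    for (int i = 0; i < LANES; i++) {
        if (a.v[i] > b.v[i]) {
            m |= (mask16)(1u << i);
        }
    }
    return m;
}

/* Masked add: lanes whose mask bit is set receive a + b;
   all other lanes keep their value from src unchanged. */
static vec16 vadd_masked(vec16 src, mask16 m, vec16 a, vec16 b) {
    vec16 r = src;
    for (int i = 0; i < LANES; i++) {
        if (m & (1u << i)) {
            r.v[i] = a.v[i] + b.v[i];
        }
    }
    return r;
}
```

Used together, `vcmpgt(x, zero)` followed by `vadd_masked(x, m, x, one)` is the vector equivalent of `if (x > 0) x += 1;` applied to every lane at once: for x = {-8, -7, ..., 7} the mask comes out as 0xFE00 (lanes 9 to 15), and only those seven lanes are incremented. Predication of this kind is what lets many lanes share one instruction stream even where scalar code would have branched.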

Talking about power budgets, Intel revealed that Larrabee would have a power budget similar to that of existing discrete GPUs rather than CPUs. To us, at least, that makes it almost certain that this will be an add-in card, especially given the proposed schematics in Ars Technica's article.

What really seals the deal is that Intel is expanding its software development tools to support the Larrabee architecture... and that includes supporting industry-standard APIs such as DirectX and OpenGL. We know this sounds strange coming from a company known for its relatively poor integrated graphics chipsets (at least from a gaming perspective; they're all that game developers seem to moan about these days... aside from PC gaming dying, of course), but Intel will soon be taking the fight to Nvidia and AMD in the discrete graphics market. We frankly cannot wait, because it will hopefully spice up the market once again.
